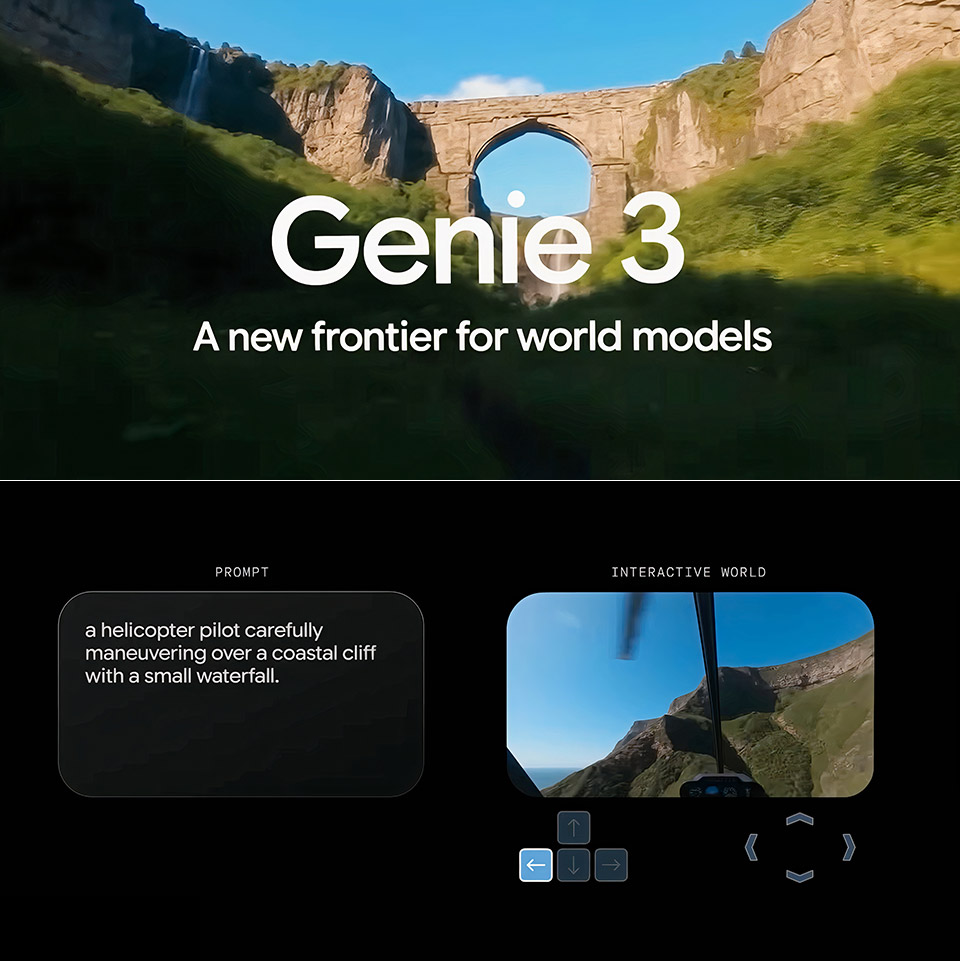

Genie 3 world model from DeepMind creates interactive 3D environments in real-time from text prompts — a major leap toward AGI and AI agent training.

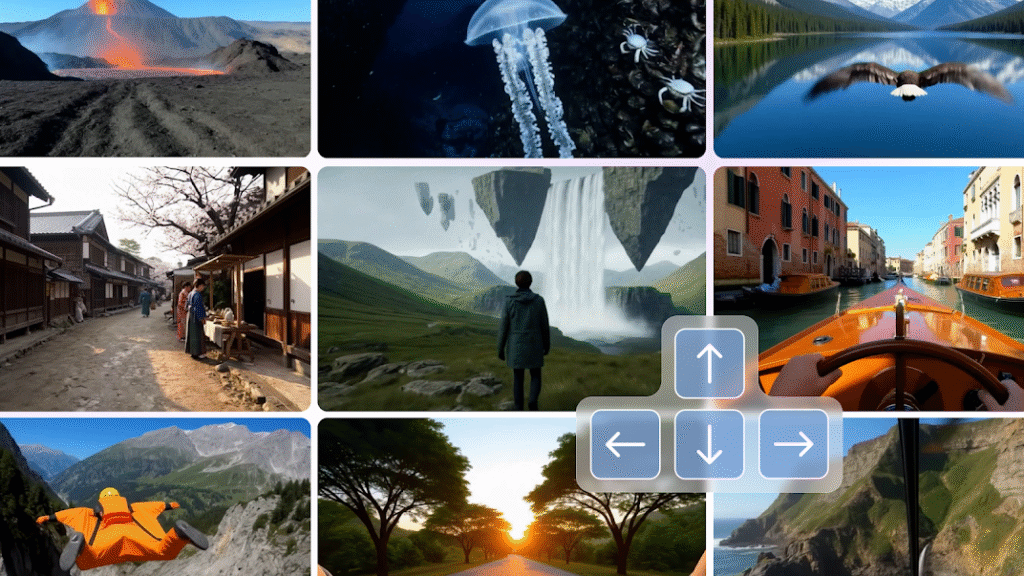

Ever wondered what it would feel like to walk into a world created by AI, change the weather mid‑journey, or add a bird with just a text prompt? That’s the idea behind the new Genie 3 world model from Google DeepMind. Within seconds of entering a prompt, you are exploring a scene rendered at 720p and 24 fps. And the real kicker: it remembers what you saw, even if you look away and come back. Whether you’re a tech enthusiast, student, entrepreneur, or just curious about emerging AI, Genie 3 offers a peek into the future of interactive learning, gaming, and robotics.

🧠 What Is Genie 3—and Why It Matters (Genie 3 explained)

Genie 3 is the third iteration of DeepMind’s world models: AI systems capable of generating interactive, explorable 3D environments from simple text or image prompts (arXiv, Ars Technica, Industry Leaders Magazine, SiliconANGLE, Businessworld, The Guardian, The Verge).

- Real-Time Interaction: Generate and navigate a world at 720p and 24 fps that responds immediately to your commands (Ars Technica, The Verge).

- Persistent Visual Memory: Objects remain consistent for around one minute, so revisiting a wall, a tree, or text yields the same view (Ars Technica, The Verge).

- Promptable World Events: Change the weather or add animals and vehicles, all on the fly (Ars Technica, The News, The Verge).

- Training AGI Agents: Designed to train embodied AI (robots, agents, self-driving systems) in safe, limitless environments (Businessworld).
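To put those numbers in perspective, here is a back-of-the-envelope calculation of what 720p at 24 fps with roughly one minute of memory implies. It is only arithmetic on the figures quoted above; the 1280×720 frame size is an assumption (the usual meaning of "720p"), and none of this describes how Genie 3 is actually implemented.

```python
# Figures quoted in the article.
FPS = 24                 # frames per second
MEMORY_SECONDS = 60      # "around one minute" of visual memory

# Assumption: "720p" means the standard 16:9 frame of 1280 x 720 pixels.
WIDTH, HEIGHT = 1280, 720

# Time available to produce each frame if generation keeps up in real time.
frame_budget_ms = 1000 / FPS

# How many frames fall inside a one-minute memory window.
frames_in_memory = FPS * MEMORY_SECONDS

# Raw pixel throughput the model has to sustain.
pixels_per_frame = WIDTH * HEIGHT
pixels_per_second = pixels_per_frame * FPS

print(f"Per-frame budget: {frame_budget_ms:.1f} ms")
print(f"Frames in memory window: {frames_in_memory}")
print(f"Pixels per second: {pixels_per_second:,}")
```

In other words, staying consistent for a minute means staying consistent across well over a thousand generated frames, each produced in a few tens of milliseconds.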

Result: A world model that’s more consistent, interactive, and visually rich than ever before.

What It Isn’t: It’s not yet public. Genie 3 is in a limited research preview, accessible only to select researchers and creators while DeepMind refines safety, ethical use, and technical constraints (The News, The Verge, The Times of India).

Key takeaway:

Genie 3 isn’t just about creating pretty worlds—it’s about enabling AI agents to learn and train in dynamic, human‑like simulated spaces.

How Genie 3 Builds on Genie 1 & Genie 2

Genie 1 & 2 Recap

- Genie 1 (2024) introduced the idea: generate interactive 2D, game‑like worlds from videos and prompts, learning action control without labeled data (syncedreview.com, arXiv, sites.google.com, The News, THE DECODER).

- Genie 2 (December 2024) was a leap. It generated playable 3D environments lasting up to a minute, with keyboard/mouse interaction and long-horizon memory (~10–20 s), from a single image prompt (deepmind.google, The Times of India, O-mega).

What’s New with Genie 3

- Longer visual memory → up to one minute, compared with roughly 10–20 seconds for Genie 2 (Ars Technica).

- Higher fidelity → 720p at 24 fps, for smoother interaction than before (Ars Technica, The News, The Verge).

- Dynamic prompting in real time, aka “promptable world events”: weather shifts, new objects, and evolving scenes without restarting (The News).

Key takeaway

Genie 3 sharpens the core Genie promise: seamless, evolving simulations that the model remembers and adapts to as you explore.

Real‑Life Use Cases (Examples, analogies & benefits)

🚗 Training Autonomous Vehicles

Imagine a car simulator where a pedestrian pops out or a sudden obstacle appears. Agents trained in Genie 3 can experience edge cases in controlled virtual warehouses or roads, without real-world risk (The Guardian, Businessworld).

👩‍💻 Education, Virtual Walkthroughs & Media

In India, schools or tech colleges could use Genie‑generated labs to visualize biological systems or engineering machines. The worlds adapt in real-time as students input different scenarios—like rainfall or equipment change.

🎮 Rapid Game Prototyping

Designers can spin up a full level in minutes: desert terrain, volcano eruption, NPCs milling around—all controlled with prompts. Then test gameplay without building every asset manually.

🤖 Robotics & Embodied Agents

AI agents like SIMA (DeepMind’s generalist agent for 3D virtual environments) can explore generated environments, learning spatial interaction and planning without needing physical hardware (The Guardian).

Real‑world insight

These aren’t just futuristic demos; they mirror how humans learn—by exploring, tweaking and testing hypotheses in a safe sandbox.

Genie 3’s Major Challenges & Limitations

No world is perfect—even one created by AI.

- Memory Span Limit: Visual memory lasts only around one minute, not hours, which limits longer simulations (Ars Technica, The News).

- Interaction Constraints: Users and agents can only take predefined actions (e.g. keyboard input); gestures and full physics aren’t yet supported.

- Text Generation Gaps: Legible text in a scene usually appears only when it is specified in the prompt; text the model generates on its own, such as signs, often fails to render correctly (The News, The Verge).

- Ethics & Safety: DeepMind is cautious, testing usage limits and bias controls and working to ensure worlds aren’t misused (The Times of India).

- Not Public Yet: Only a few researchers can use it now. No public API or release date has been announced (The News, The Verge).

Quick summary

Genie 3 is powerful—but still early stage. Expect future models to expand memory, controls, graphics and accessibility.

Technical Foundations (Simplified)

DeepMind built Genie models using a blend of advanced AI building blocks:

- Spatiotemporal video tokenizer: Converts frames into discrete tokens capturing both imagery and motion dynamics.

- Latent action model: Learns the link between what happened in one frame and the next without labeled actions (The Verge, The News, TechCrunch, blog.lukmaanias.com, syncedreview.com, arXiv).

- Autoregressive dynamics model: Predicts the next tokens (future frames) based on prior states and user actions.
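To make the three pieces concrete, here is a deliberately tiny sketch of how they fit together. Everything in it is a stand-in: the real components are large learned neural networks, while the hypothetical `tokenize`, `infer_latent_action`, `predict_next`, and `rollout` functions below are hand-written rules chosen only to show the data flow (frames → tokens → actions → next tokens). It is not DeepMind's implementation.

```python
from collections import deque
from typing import Deque, List, Tuple

Frame = List[int]          # toy "frame": a flat list of pixel intensities 0..255
Tokens = Tuple[int, ...]   # discrete tokens produced by the tokenizer

NUM_LEVELS = 4             # toy token vocabulary size (assumption)


def tokenize(frame: Frame) -> Tokens:
    """Toy tokenizer: quantize each pixel into one of NUM_LEVELS tokens."""
    return tuple(min(p * NUM_LEVELS // 256, NUM_LEVELS - 1) for p in frame)


def infer_latent_action(prev: Tokens, nxt: Tokens) -> int:
    """Toy latent action model: name the transition between two frames
    without human labels (0 = darker, 1 = unchanged, 2 = brighter)."""
    delta = sum(n - p for p, n in zip(prev, nxt))
    if delta > 0:
        return 2
    if delta < 0:
        return 0
    return 1


def predict_next(context: Deque[Tokens], action: int) -> Tokens:
    """Toy dynamics model: predict the next tokens from the latest
    context entry and the chosen action."""
    step = action - 1  # maps actions 0, 1, 2 to -1, 0, +1
    return tuple(max(0, min(NUM_LEVELS - 1, t + step)) for t in context[-1])


def rollout(first_frame: Frame, actions: List[int], context_len: int = 4) -> List[Tokens]:
    """Autoregressive loop: each predicted frame is fed back as context.
    The bounded deque plays the role of a limited memory window."""
    context: Deque[Tokens] = deque([tokenize(first_frame)], maxlen=context_len)
    generated: List[Tokens] = []
    for action in actions:
        nxt = predict_next(context, action)
        context.append(nxt)
        generated.append(nxt)
    return generated


if __name__ == "__main__":
    start = [0, 64, 128, 255]
    for tokens in rollout(start, actions=[2, 1, 0]):
        print(tokens)
```

The detail worth noticing is the feedback loop in `rollout`: the model's own output becomes its next input, which is why small errors can accumulate and why a bounded context limits how far back the world stays consistent.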

Think of it like cooking:

- Genie’s tokenizer is the chopped veggies,

- the action model is the recipe steps,

- and the dynamics model is the simmering pot that turns it into a meal—frame by frame.

The original Genie combined these components in an 11-billion‑parameter model, trained unsupervised on unlabeled videos, including internet gameplay and robotics footage (syncedreview.com, sites.google.com, arXiv, blog.lukmaanias.com).

Final thought

Genie achieves its magic without labeled datasets—learning from raw video data like a child watching endless gameplay.

Why Genie 3 Helps Build AGI (Artificial General Intelligence)

For many researchers, world models are the next frontier in achieving AGI—a system that can understand and act across diverse tasks.

- DeepMind CEO Demis Hassabis views these models as central to his vision: a way for AI to learn not just from text or static data but via simulated experience (The Guardian, Ars Technica, THE DECODER).

- A new DeepMind team led by Tim Brooks is focused on scaling real-time simulation tools, integrating Genie with other multimodal models like Gemini and Veo 3 (The Verge, techzine.eu, THE DECODER).

- With an effectively unlimited curriculum of different worlds, AI agents can learn planning, reasoning, physical intuition, and adaptability, all key AGI components.

Key takeaway

Genie 3 is more than tech—it’s a cornerstone in how AI may learn to think and act like humans, but safely within virtual worlds.

Tips & Insights for Indian Readers & Startups

- Universities & hackathons in India could lobby for access to Genie preview—use it to teach robotics or game design.

- Innovators can script prototypes using Indian landscapes: Himalayan trails, Marwari palaces, Mumbai streets—test cross‑cultural agent behavior.

- Collaboration potential: pair Indian language prompts (Hindi, Telugu) with Genie to craft multilingual simulation rooms.

- Mistakes to avoid:

  - Relying solely on Genie-generated worlds without validation.

  - Forgetting domain-specific rules, e.g. traffic behavior, cultural realism.

- Real‑user insight: Early testers mention lag when adding complex objects, or seeing inconsistent textures near horizon edges.

One-liner

Though early-stage, Genie 3 offers India’s tech community a playground to experiment with world simulations rooted in local context.

✅ Summary Key Takeaways

- Genie 3 transforms text prompts into interactive, explorable 3D worlds with visual memory and real-time changes.

- Builds on Genie 1 & 2, stacking longer memory (≈1 min), higher resolution (720p), and live event triggering.

- Useful for AI agent training, robotics, game prototyping, and educational simulations.

- Largely experimental—limited access, with memory and interaction constraints.

- Tech stack includes video tokenizer, latent action model, dynamics model, all trained unsupervised on raw video.

- Seen as a key stage toward AGI, teaching AI how to act and predict in virtual yet believable worlds.

Who can use Genie 3 currently?

Only selected researchers and creators in a limited preview; no public release yet.

Why is Genie 3 important for AGI?

It enables embodied AI agents to train via simulated experience, fostering planning and adaptation.

What is the Genie 3 world model?

Genie 3 is DeepMind’s AI that builds interactive 3D worlds from text or image prompts in real‑time.

How long does Genie 3 remember environments?

Its visual memory lasts about one minute before details may fade or shift.

Can users change weather or add objects in Genie 3?

Yes—promptable world events let you modify scenes (rain, animals, vehicles) on the fly.